A software ecosystem based on European technologies

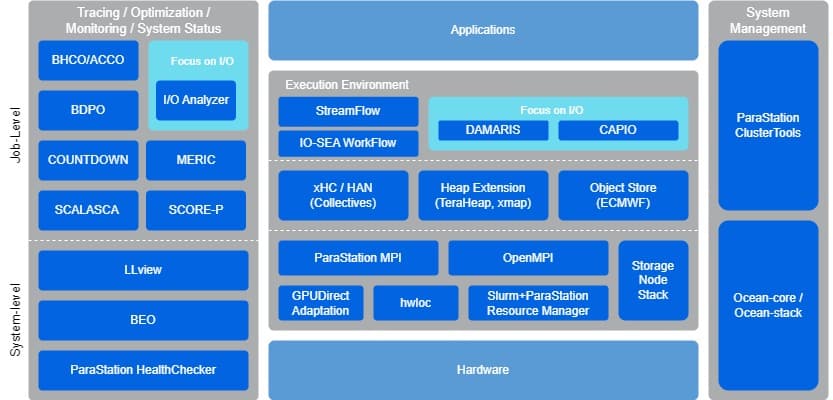

One of the objectives of EUPEX is to provide a software ecosystem for the pilot based on European technologies. Its design will not only take into consideration the needs of the key applications identified in EUPEX, but also those of the system operators for the management of large-scale Modular Supercomputing Architecture (MSA) systems.

The EUPEX software stack addresses four objectives:

Management

Define a management software stack to support the administration of modular systems while being versatile enough to meet the requirements of upcoming architectures.

More information on the Management software stack

Execution environment

Integrate different components forming the execution environment that will enable the efficient utilisation of all available resources on the modular architecture of the EUPEX platform.

More information on the Execution Environment

Tools

Provide a set of tools that help application developers and system operators optimise efficiency with respect to performance and energy, i.e., maximise system utilisation.

More information on Tools

Storage architecture

Define a multi-tier storage architecture to meet the I/O demands of large-scale MSA systems, to transparently integrate fast storage technologies, and to minimise data movement.

More information on the Storage Architecture