Defining a multi-tier storage architecture to meet the I/O demands of large-scale MSA systems, to transparently integrate fast storage technologies, and to minimize data movement.

IO-SEA

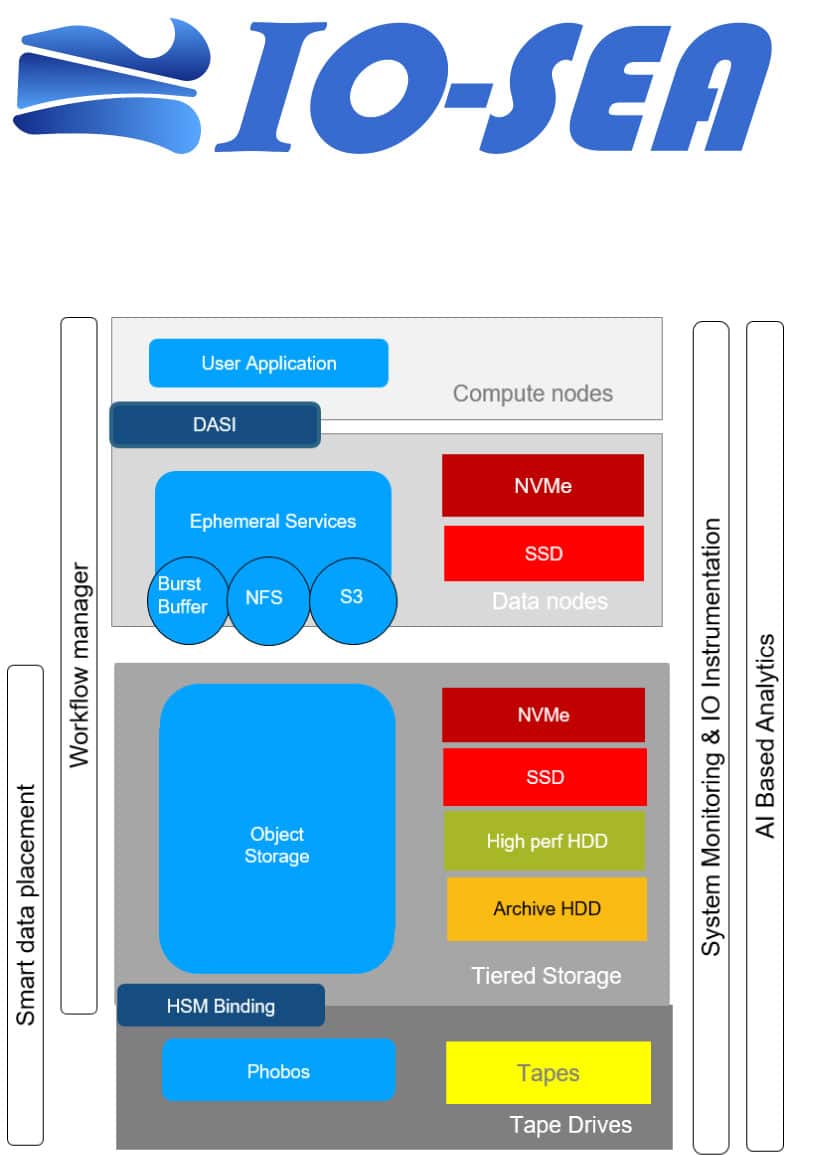

IO-SEA is a full storage system software stack that merges the outcomes of several other open-source software projects. The Phobos object store provides scalable, high-capacity storage with extended HSM (hierarchical storage management) features via the generic HESTIA API and the Robinhood policy engine. IO-SEA extends storage scalability by introducing ephemeral services, a new level in the storage stack, which act as temporary servers dedicated to compute jobs. These ephemeral services are created dynamically on data nodes close to the compute nodes, thus making I/O operations less expensive in terms of network resources and energy. Ephemeral services are managed via the Workflow Manager and exist in different flavours, including smart burst buffers and NFS servers (via NFS-Ganesha). IO-SEA includes a high-level API, DASI (Data Access and Storage Interface), which helps end users address object storage in an intuitive way, with semantics close to those of their scientific domain. The whole stack has advanced instrumentation and monitoring features that feed a database; its content drives the Recommendation System, which can advise optimisations via AI frameworks.

The EUPEX Pilot will showcase some of the IO-SEA features.

Responsible partner: CEA

CAPIO (Cross Application Programmable I/O)

CAPIO is a user-space middleware that can enhance the performance of scientific workflows composed of applications that communicate through files and the file system's POSIX APIs. CAPIO is designed to be programmable: it allows the definition of cross-application parallel data-movement streaming patterns and of parallel in-situ/in-transit data transformations on these data streams. Data-movement programming complements the application workflow by giving the application pipeline cross-layer optimization between the application and the storage system. The user provides a JSON file that states, for each file or directory, which application produces it and which applications read it, how the communication is performed (e.g., scatter, gather, broadcast), and any in-situ or in-transit data transformation to apply. In addition, CAPIO enables streaming computation while still using the POSIX I/O APIs. It automatically transforms synchronous POSIX-based I/O operations into asynchronous ones, removing the file system from the critical path of the computation. This feature improves the performance of scientific workflows that are typically organized as a data-flow pipeline of several components, where each component can start only once the previous stages have generated all of its input data.
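To make the idea of a per-file workflow description more concrete, the following is a minimal Python sketch that builds and checks such a description. The field names (`streams`, `path`, `producer`, `consumers`, `pattern`, `transform`) and the application names are hypothetical and chosen only for illustration; they are not taken from CAPIO's actual configuration format, which should be looked up in the CAPIO documentation.

```python
import json

# Hypothetical schema: field and application names are illustrative only
# and are NOT CAPIO's real configuration keys.
workflow = {
    "name": "example_pipeline",
    "streams": [
        {
            "path": "out/particles.dat",
            "producer": "simulation",
            "consumers": ["analysis"],
            "pattern": "scatter",
            "transform": None,
        },
        {
            "path": "out/summary/",
            "producer": "analysis",
            "consumers": ["visualisation", "archiver"],
            "pattern": "broadcast",
            "transform": "compress",
        },
    ],
}

# Communication patterns named in the text above.
ALLOWED_PATTERNS = {"scatter", "gather", "broadcast"}


def validate(config):
    """Return a list of problems found in the (hypothetical) description."""
    problems = []
    for stream in config["streams"]:
        path = stream.get("path", "<no path>")
        if not stream.get("producer"):
            problems.append(f"{path}: no producer declared")
        if not stream.get("consumers"):
            problems.append(f"{path}: no consumers declared")
        if stream.get("pattern") not in ALLOWED_PATTERNS:
            problems.append(f"{path}: unknown communication pattern")
    return problems


# Serialise to JSON text, as a user would store it in a file.
config_text = json.dumps(workflow, indent=2)
```

Calling `validate(json.loads(config_text))` returns an empty list for the description above. The point of the sketch is only that every file or directory carries its producer, its consumers, and a communication pattern, so a middleware layer can plan data movement without changes to the applications.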

Responsible partner: CINI – University of Pisa and University of Turin

Damaris

Damaris is a middleware for I/O and data management targeting large-scale, MPI-based HPC simulations. It enables asynchronous in-situ or in-transit access to simulation field data. It has connectors to HDF5 for writing data, to VisIt and ParaView for in-situ visualisation, and to Python and Dask for in-situ data analytics. Users can also write their own plugin code.
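The general idea of asynchronous access to field data can be illustrated with a small Python sketch. It does not use the Damaris API and is not how Damaris is implemented; it only shows the pattern in which a simulation loop hands each step's data to a separate worker, so that analysis or output overlaps with computation. The function `run_simulation` and the toy field are hypothetical.

```python
import queue
import threading


def run_simulation(steps, process):
    """Run a toy simulation and process each step's field asynchronously.

    `process` stands in for a plugin (writer, visualisation, analytics).
    """
    handoff = queue.Queue()
    results = []

    def worker():
        # Consume field snapshots until the sentinel (None) arrives.
        while True:
            item = handoff.get()
            if item is None:
                break
            step, field = item
            results.append((step, process(field)))

    thread = threading.Thread(target=worker)
    thread.start()

    field = [0.0] * 4
    for step in range(steps):
        field = [x + 1.0 for x in field]   # stand-in for the compute phase
        handoff.put((step, list(field)))   # copy, then continue computing

    handoff.put(None)                      # signal end of simulation
    thread.join()
    return results


# The "plugin" here just computes the mean of the field at each step.
summary = run_simulation(3, lambda f: sum(f) / len(f))
# summary == [(0, 1.0), (1, 2.0), (2, 3.0)]
```

The simulation never waits for `process` to finish; it only pays for copying the snapshot into the queue.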

In EUPEX, Damaris is to be provided as part of the system user software stack.

Responsible partner: Inria

KV store (Parallax/Tebis)

Parallax is an LSM-based persistent key-value store designed for flash storage devices (SSDs, NVMe). Parallax reduces I/O amplification and increases CPU efficiency using the following mechanism. It classifies key-value (KV) pairs into three size-based categories (Small, Medium, and Large) and applies a different policy to each. It stores Small KV pairs inside the LSM levels (as RocksDB does). It always performs key-value separation for Large KV pairs (as BlobDB does), writing them to a value log, and it uses a garbage collection (GC) mechanism for that log. For Medium KV pairs, it uses a hybrid policy: it performs KV separation up to the semi-last levels and then stores the pairs in place, which frees log space in bulk without using GC. Tebis is an efficient distributed KV store that uses Parallax as its storage engine and reduces the I/O amplification and CPU overhead of maintaining the replica index. Tebis uses a primary-backup replication scheme that performs compactions only on the primary nodes and sends pre-built indexes to the backup nodes, avoiding all compactions on the backups.
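The size-based policy can be summarised in a short Python sketch. The thresholds (`SMALL_MAX`, `MEDIUM_MAX`), the use of total key-plus-value size, and the `in_place_from` parameter are assumptions made for illustration; they are not Parallax's actual values or internal API.

```python
# Hypothetical size thresholds in bytes; Parallax's real limits may differ.
SMALL_MAX = 128
MEDIUM_MAX = 1024


def category(key: bytes, value: bytes) -> str:
    """Classify a KV pair by its total size (an assumption of this sketch)."""
    size = len(key) + len(value)
    if size <= SMALL_MAX:
        return "small"
    if size <= MEDIUM_MAX:
        return "medium"
    return "large"


def placement(cat: str, level: int, in_place_from: int) -> str:
    """Decide where the value lives for a pair at a given LSM level.

    `in_place_from` is a hypothetical parameter: the first level at which
    medium pairs are moved out of the value log and stored in place.
    """
    if cat == "small":
        return "in_place"      # always stored inside the LSM levels
    if cat == "large":
        return "value_log"     # always separated; the log needs GC
    # Medium: separated in the upper levels, in place further down.
    return "in_place" if level >= in_place_from else "value_log"
```

For example, with `in_place_from=3`, a medium pair at level 1 is placed in the value log, while the same pair at level 3 is stored in place. Small and large pairs ignore the level entirely.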

Role in EUPEX: High-performance key-value store for HPC workflows and applications with small data items, updates, and range queries.

Responsible partner: FORTH

FastMap

FastMap is a next-generation memory-mapped I/O path (buffered/direct read/write system calls are also supported for completeness), implemented as a Linux kernel module. It uses its own page cache, separate from the kernel-provided cache, and supports the use of 2 MB hugepages over data backed by block devices. FastMap addresses issues present in current memory-mapped I/O implementations with regard to a) scalability and software overheads, b) overheads stemming from synchronous operations in kernel space, and c) processing overheads and device underutilization caused by page granularity.

FastMap addresses these issues with a) a scalable metadata architecture centered around per-core structures, b) support for asynchronous and transparent page prefetching and hugepage promotion, and c) the usage of separate regular page and hugepage allocation/management pools.
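For readers unfamiliar with memory-mapped I/O, the following Python sketch shows the general access pattern using the standard `mmap` module on an ordinary temporary file. It does not involve FastMap or hugepages; the helper name `mmap_roundtrip` is hypothetical and the example only illustrates that file data is read and written through memory accesses rather than explicit read/write calls.

```python
import mmap
import os
import tempfile


def mmap_roundtrip(payload: bytes) -> bytes:
    """Write `payload` through a memory mapping and read it back from the file."""
    size = mmap.PAGESIZE
    if len(payload) > size:
        raise ValueError("payload must fit in one page for this sketch")

    fd, path = tempfile.mkstemp()
    try:
        os.ftruncate(fd, size)              # the file must cover the mapping
        with mmap.mmap(fd, size) as mapped:
            mapped[:len(payload)] = payload  # a store to memory, not write()
            mapped.flush()                   # push dirty pages to the file
        os.lseek(fd, 0, os.SEEK_SET)
        return os.read(fd, len(payload))     # verify via the regular I/O path
    finally:
        os.close(fd)
        os.remove(path)
```

In this pattern the page cache and the page-fault path sit between the application and the device, which is the layer where the overheads listed above arise.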

Role in EUPEX: Fast memory-mapped I/O capabilities for use in the storage I/O path and for supporting large heaps over NVM and Flash-based SSDs.

Responsible partner: FORTH